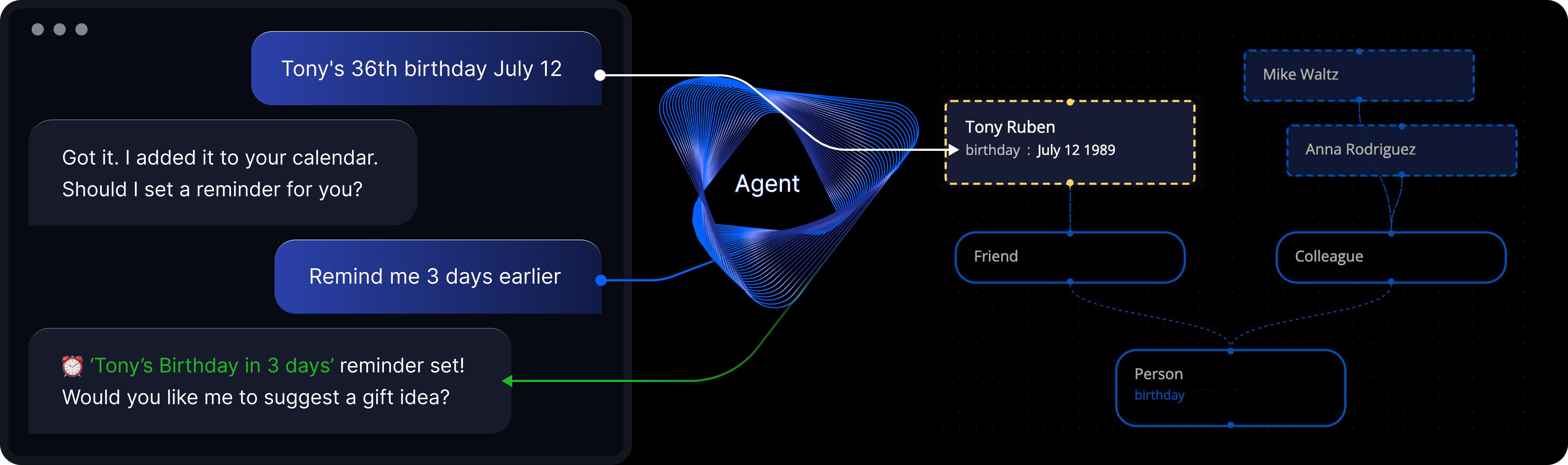

Dynamic Memory: Common AI agent architectures rely on rigid memory APIs with fixed data structures, which limits scalability. We instead let agents dynamically create, connect, and reason over data within an expanded semantic graph.
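As a rough illustration of what "dynamically create and connect" could look like, here is a minimal in-memory sketch. The `SemanticGraph` class and its method names are hypothetical and are not this project's actual API; the point is only that node types and relations are plain strings chosen at runtime rather than a fixed schema.

```python
from collections import defaultdict


class SemanticGraph:
    """Toy graph: nodes carry free-form properties instead of a fixed schema."""

    def __init__(self):
        self.nodes = {}                 # node_id -> {"type": str, **props}
        self.edges = defaultdict(set)   # (src_id, relation) -> {dst_id, ...}
        self._next_id = 0

    def add_node(self, node_type, **props):
        # Any node type can be introduced at runtime; nothing is predeclared.
        node_id = self._next_id
        self._next_id += 1
        self.nodes[node_id] = {"type": node_type, **props}
        return node_id

    def connect(self, src, relation, dst):
        # Relations are also just strings the agent makes up as needed.
        self.edges[(src, relation)].add(dst)

    def neighbors(self, src, relation):
        return sorted(self.edges.get((src, relation), set()))


graph = SemanticGraph()
alice = graph.add_node("person", name="Alice")
book = graph.add_node("book", title="Dune")
graph.connect(alice, "is_reading", book)

# Follow the edge to answer "what is Alice reading?"
titles = [graph.nodes[n]["title"] for n in graph.neighbors(alice, "is_reading")]
```

A production system would presumably back this with a graph database and richer queries; the dictionary-based version only shows the shape of the idea.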

Entity Extraction: By structuring information during conversation, the system turns mentions of real-world things, such as people, books, or tasks, into evolving entities it can remember and act upon.
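One way to picture an "evolving entity" is an upsert: the first mention creates the record, and later mentions update it. The sketch below assumes the extraction step itself (likely an LLM call) has already produced `(kind, name, attributes)` tuples; the `mentions` list and the `upsert_entity` helper are hypothetical stand-ins, not the system's real interface.

```python
def upsert_entity(entities, kind, name, **attrs):
    """Create the entity on first mention; merge new attributes on later ones."""
    key = (kind, name.lower())  # case-insensitive identity, an assumption
    entity = entities.setdefault(key, {"kind": kind, "name": name, "mentions": 0})
    entity["mentions"] += 1
    entity.update(attrs)        # newer attribute values overwrite older ones
    return entity


# Hypothetical extractor output across two conversation turns.
mentions = [
    ("book", "Dune", {"status": "reading"}),
    ("person", "Alice", {}),
    ("book", "dune", {"status": "finished"}),
]

entities = {}
for kind, name, attrs in mentions:
    upsert_entity(entities, kind, name, **attrs)

dune = entities[("book", "dune")]
```

Here the two mentions of the book collapse into one entity whose `status` moves from `reading` to `finished`. Real entity resolution is harder than lowercasing a name, so treat the matching rule as a placeholder.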

Context Outside LLM: Instead of stuffing all memory into the LLM’s context window, we store it in a graph — making the system more reliable, interpretable, and less prone to hallucinations.
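To make the contrast with context stuffing concrete, the following sketch keeps facts as graph edges outside the model and passes only the edges relevant to a question into the prompt. The triple format, the `build_context` helper, and the naive keyword matching are all assumptions made for illustration; the actual retrieval strategy is not described here.

```python
def build_context(facts, query, limit=3):
    """Return only the stored facts whose subject or object is named in the query."""
    words = set(query.lower().replace("?", " ").split())
    hits = [f for f in facts if f[0].lower() in words or f[2].lower() in words]
    # Serialize the selected edges as short lines for the prompt.
    return "\n".join(f"{s} {r} {o}" for s, r, o in hits[:limit])


# Edges of the memory graph as (subject, relation, object) triples.
facts = [
    ("Alice", "is_reading", "Dune"),
    ("Alice", "works_with", "Bob"),
    ("Carol", "owns_task", "Q3-report"),
]

prompt_context = build_context(facts, "What is Bob working on?")
```

Only the single edge mentioning Bob reaches the prompt, and because each line maps back to a stored edge, it is possible to inspect exactly what the model was told.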

Zero-Friction Input: Users shouldn’t have to think about organizing data — the agent handles it automatically, reducing friction and cognitive overhead.